Deep Reinforcement Learning

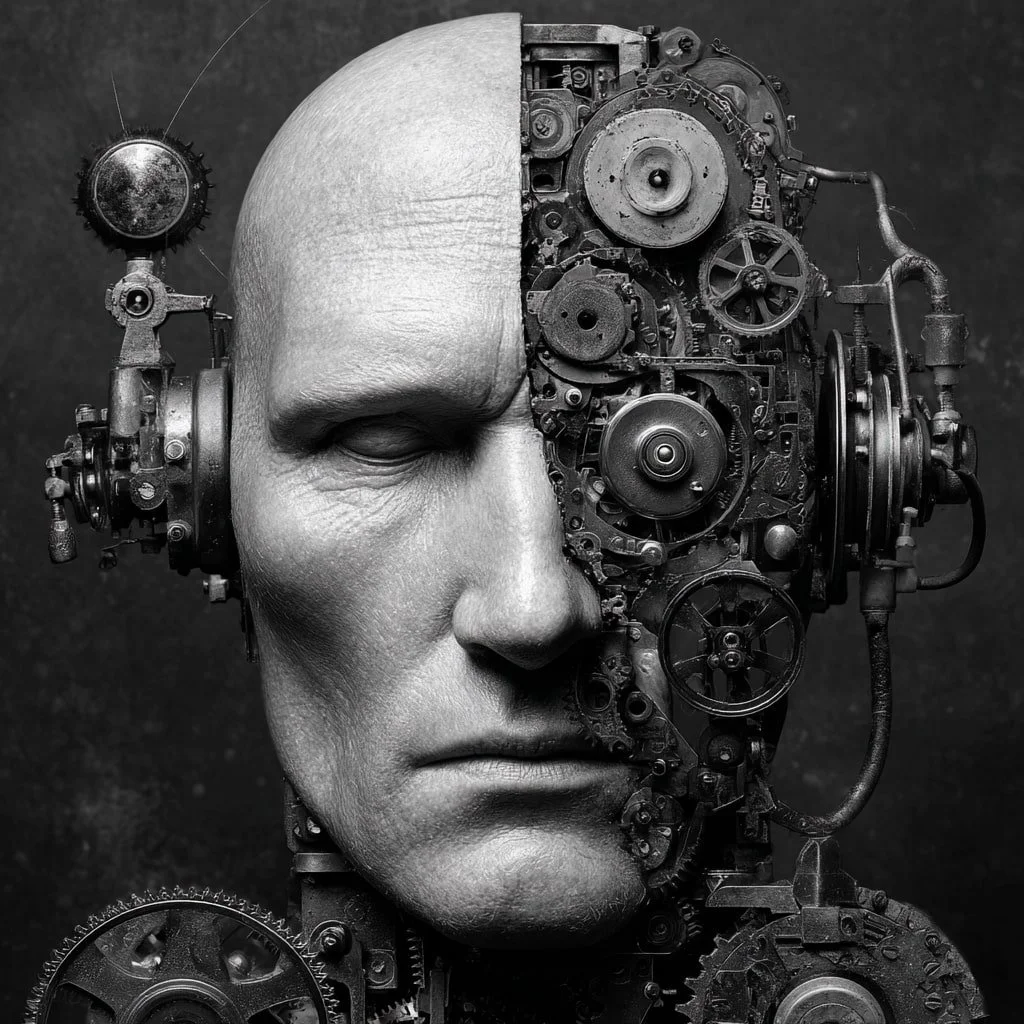

Image by Midjourney

Deep reinforcement learning (DRL) is a branch of machine learning that is concerned with teaching agents to take action in an environment in order to maximize a reward. The key difference between deep reinforcement learning and other types of machine learning is that deep reinforcement learning involves a process of trial and error, where the agent learns from its mistakes in order to optimize its behavior. In many ways, deep reinforcement learning is similar to the process of learning that humans undergo.

For instance, when we are first learning to drive a car, we make mistakes and have accidents. However, with time and experience, we learn how to avoid accidents and become better drivers. In the same way, deep reinforcement learning allows agents to gradually improve their behavior as they gain experience. One of the major benefits of deep reinforcement learning is that it can be used to solve complex tasks that are difficult for other machine learning algorithms. For instance, recent advancements in deep reinforcement learning have been used to teach agents how to play games such as chess and Go. As deep reinforcement learning continues to be developed, it is likely that it will be used to solve ever more complex tasks.

Deep reinforcement learning (DRL) is a powerful branch of machine learning that combines reinforcement learning principles with deep learning techniques, allowing agents to learn complex tasks through interaction with an environment. In DRL, agents take actions based on their current state, receive rewards or penalties, and adjust their behaviors to maximize cumulative rewards over time. Unlike supervised learning, which relies on labeled data, DRL learns through trial and error, continuously refining its strategy based on feedback from the environment.

Core Concepts and Components

Reinforcement Learning Basics

At its core, reinforcement learning (RL) involves an agent, an environment, actions, states, and rewards. The agent navigates the environment by taking actions, which change the state of the environment. Based on these actions, the agent receives a reward or penalty, which informs its future decisions. The goal is to learn a policy, a strategy that defines the best action to take in each state to maximize the total expected reward over time.

Deep Learning Integration

The integration of deep learning allows RL algorithms to handle high-dimensional inputs, such as images or unstructured data, making it feasible to apply RL to tasks that were previously too complex. Neural networks, particularly deep neural networks (DNNs), serve as function approximators to map states to actions or to predict the value of taking a specific action in a given state. This capability enables the agent to generalize its learning to new, unseen states, enhancing its ability to solve complex tasks.

Exploration vs. Exploitation

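A central challenge in DRL is balancing exploration (trying new actions to discover their effects) and exploitation (using known actions that yield high rewards). Algorithms like Q-learning and policy gradient methods manage this balance through an explicit exploration mechanism, for example epsilon-greedy action selection in Q-learning or sampling from a stochastic policy in policy gradient methods, so that the agent keeps trying unfamiliar actions while it learns a good policy.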
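One common way to make the exploration and exploitation trade-off concrete is epsilon-greedy action selection. The sketch below is illustrative only: the function names `epsilon_greedy` and `decayed_epsilon`, the linear decay schedule, and all default values are assumptions chosen for this example, not something the article prescribes.

```python
import random

def epsilon_greedy(q_values, epsilon, rng=random):
    """Return an action index: random with probability epsilon, else the best-known one."""
    if rng.random() < epsilon:
        # Explore: try any action, regardless of its current estimate.
        return rng.randrange(len(q_values))
    # Exploit: pick the action with the highest estimated value.
    return max(range(len(q_values)), key=q_values.__getitem__)

def decayed_epsilon(step, start=1.0, end=0.05, decay_steps=1000):
    """Linearly anneal epsilon from `start` to `end` over `decay_steps` steps (assumed schedule)."""
    fraction = min(step / decay_steps, 1.0)
    return start + fraction * (end - start)
```

With a high epsilon early in training the agent mostly explores; as epsilon decays it increasingly exploits what it has learned.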

This animation shows a simplified deep reinforcement learning scenario, where an agent (white dot) learns to navigate a grid toward a goal (blue dot, flashing green when reached) by taking actions — up, down, left, or right — and receiving rewards. The faint white circles mark possible positions. Each episode begins with the agent at the top-left and ends when it reaches the goal, earning a positive reward; every step costs a small penalty to encourage efficiency. The log beneath records each action, position change, and reward, while the score tracks the agent’s average return over the last 20 episodes, providing a sense of how its performance is improving over time.
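A scenario like the one described above can be approximated in a few lines. The following is a minimal sketch, not the code behind the animation: it uses tabular Q-learning rather than a neural network, and the grid size, goal position (bottom-right), reward values, and hyperparameters are all assumptions made for illustration.

```python
import random
from collections import deque

SIZE = 4                                     # assumed grid size
START, GOAL = (0, 0), (SIZE - 1, SIZE - 1)   # top-left start; goal position is assumed
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}
STEP_PENALTY, GOAL_REWARD = -0.05, 1.0       # assumed reward values

def step(state, action):
    """Apply an action; moves that would leave the grid keep the agent in place."""
    d_row, d_col = ACTIONS[action]
    row = min(max(state[0] + d_row, 0), SIZE - 1)
    col = min(max(state[1] + d_col, 0), SIZE - 1)
    nxt = (row, col)
    if nxt == GOAL:
        return nxt, GOAL_REWARD, True
    return nxt, STEP_PENALTY, False

def train(episodes=500, alpha=0.5, gamma=0.95, epsilon=0.1, max_steps=200, seed=0):
    """Run tabular Q-learning; return the Q-table and the mean return of the last 20 episodes."""
    rng = random.Random(seed)
    names = list(ACTIONS)
    q = {((r, c), a): 0.0 for r in range(SIZE) for c in range(SIZE) for a in names}
    recent = deque(maxlen=20)                # rolling window, like the score in the animation
    for _ in range(episodes):
        state, total = START, 0.0
        for _ in range(max_steps):
            if rng.random() < epsilon:       # explore
                action = rng.choice(names)
            else:                            # exploit the current estimates
                action = max(names, key=lambda a: q[(state, a)])
            nxt, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(nxt, a)] for a in names)
            # Temporal-difference update toward reward + discounted best next value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state, total = nxt, total + reward
            if done:
                break
        recent.append(total)
    return q, sum(recent) / len(recent)

if __name__ == "__main__":
    _, average_return = train()
    print(f"average return over the last 20 episodes: {average_return:.2f}")
```

A deep variant would replace the dictionary `q` with a neural network that takes the state as input, which matters once the state space is too large to enumerate.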

Applications of Deep Reinforcement Learning

Game Playing

DRL has gained significant attention due to its success in mastering board games such as chess and Go, as well as video games. Systems trained using DRL, such as AlphaGo and AlphaZero, have outperformed top human players, in some cases with strategies that human experts had not previously considered.

Robotics

In robotics, DRL helps in teaching robots to perform complex manipulation tasks, navigate environments, or even collaborate with humans. The robots learn from their interactions, improving their performance over time without needing explicit programming.

Healthcare

DRL is being explored for optimizing treatment plans, designing personalized medicine approaches, and assisting in medical imaging analysis by learning from vast amounts of data to make more accurate predictions.

Finance

In financial markets, DRL can optimize trading strategies by continuously learning from market data and adjusting its actions to maximize profits while managing risks.

Challenges and Future Directions

While DRL has achieved impressive results, several challenges remain:

Sample Efficiency

DRL algorithms often require vast amounts of data and computational resources to learn effectively. This limitation is a significant barrier to applying DRL in real-world scenarios where data collection is expensive or impractical.

Stability and Robustness

DRL models can be sensitive to hyperparameters and environmental changes, leading to instability during training. Researchers are actively working on developing more robust algorithms to address these issues.

Interpretability

Understanding the decision-making process of DRL agents is challenging, especially in high-stakes applications like healthcare or autonomous driving, where transparency is crucial.

As research continues, the potential applications of DRL are expanding, moving beyond games and simulations to real-world problems across various domains. Its ability to learn complex behaviors from scratch makes it a promising approach for solving tasks that are difficult for traditional machine learning algorithms. The future of DRL will likely see advancements in efficiency, scalability, and interpretability, paving the way for broader adoption in practical, real-world applications.

-

The AI blog's definition of deep reinforcement learning provides a foundational introduction to the concept but falls significantly short of academic standards and comprehensive coverage expected for a technical terminology resource. While accessible to beginners, the definition contains several technical inaccuracies and oversimplifications that could mislead readers seeking precise understanding of this complex field.

Strengths of the Definition

Accessible Introduction

The definition succeeds in making deep reinforcement learning conceptually accessible by using the human learning analogy. The comparison to learning how to drive a car effectively communicates the trial-and-error nature of reinforcement learning to non-technical audiences.

Core Concept Identification

The definition correctly identifies that deep reinforcement learning combines reinforcement learning with deep neural networks to enable agents to learn through environmental interaction and reward maximization. It appropriately emphasizes the iterative improvement aspect that distinguishes DRL from other machine learning approaches.

Practical Applications

The mention of chess and Go as successful applications provides concrete examples of DRL's capabilities, though the coverage is extremely limited compared to the broad range of real-world applications documented in the literature.

Critical Technical Deficiencies

Incomplete Definition

The definition fails to explain what "deep" actually means in this context. Deep reinforcement learning specifically uses deep neural networks with multiple hidden layers to approximate complex functions like policies, value functions, or environment models. This fundamental distinction from traditional reinforcement learning is completely absent.

Missing Key Components

The definition lacks explanation of essential DRL components identified across academic sources:

Agent and Environment: While mentioned implicitly, these core components are not formally defined

State Representation: No discussion of how agents perceive and process environmental states

Policy Functions: Missing explanation of how agents decide which actions to take

Value Functions: No mention of how agents evaluate the quality of states or actions

Reward Systems: Oversimplified treatment of the feedback mechanism

Technical Inaccuracy

The statement that agents "learn from their mistakes in order to optimize their behavior" oversimplifies the learning process. DRL agents learn from both positive and negative experiences through sophisticated algorithms like Deep Q-Networks (DQN), policy gradients, and actor-critic methods. The learning process involves complex mathematical frameworks including the Bellman equation and temporal difference learning.
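For reference, the two frameworks named above can be written compactly. The notation here is the standard textbook form and is not taken from the blog's definition; the symbols (state s, action a, reward r, discount factor γ, learning rate α) are assumptions introduced for this illustration. The first line is the Bellman optimality equation for the action-value function, and the second is the one-step temporal difference update used by Q-learning, which moves the current estimate toward the Bellman target.

```latex
Q^{*}(s, a) = \mathbb{E}\left[\, r_{t+1} + \gamma \max_{a'} Q^{*}(s_{t+1}, a') \;\middle|\; s_t = s,\ a_t = a \,\right]

Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \alpha \left[\, r_{t+1} + \gamma \max_{a'} Q(s_{t+1}, a') - Q(s_t, a_t) \,\right]
```

In Deep Q-Networks, the table Q is replaced by a neural network and the bracketed difference becomes the error signal that the network is trained to reduce.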

Missing Critical Context

Algorithmic Foundations

The definition provides no information about specific DRL algorithms. Key methods like DQN, which revolutionized the field by combining Q-learning with deep neural networks, are entirely absent. This omits crucial technical details that readers seeking comprehensive understanding would expect.

Mathematical Framework

The definition lacks any mathematical foundation. Concepts like Markov Decision Processes (MDPs), which form the theoretical backbone of reinforcement learning, are not mentioned. The Bellman equation, fundamental to understanding how value functions work, is completely absent.

Limitations and Challenges

The definition presents an overly optimistic view without acknowledging well-documented challenges:

Sample Inefficiency: DRL algorithms often require millions of training episodes

Training Instability: Deep neural networks can suffer from convergence issues

Generalization Problems: Agents may struggle to transfer learned behaviors to new environments

Reward Function Design: Creating appropriate reward structures remains challenging

Historical Context

The definition lacks important historical perspective. The breakthrough achievements of AlphaGo and AlphaZero, which demonstrated DRL's potential in complex strategic games, are mentioned only briefly without explaining their significance to the field's development.

Comparative Analysis with Academic Standards

Academic and industry sources consistently provide more comprehensive definitions. For example, authoritative sources define DRL as "a subfield of machine learning that combines principles of reinforcement learning (RL) and deep learning" while explaining how it "uses deep neural networks to represent policies, value functions, or environment models".

The blog's definition also lacks the precision found in technical literature regarding the distinction between reinforcement learning and deep reinforcement learning. Academic sources clearly explain that traditional RL uses tabular or linear function approximation, while DRL employs deep neural networks to handle high-dimensional input spaces.
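The contrast between tabular and neural representations can be illustrated with a small sketch. Both classes below are hypothetical and written only for this comparison: `QTable` stores one number per discrete state and action, while `TinyQNetwork` computes action values from a feature vector. The network has a single hidden layer and random, untrained weights for brevity; a deep network would stack several such layers and learn its weights from experience.

```python
import math
import random

class QTable:
    """Tabular Q-function: one stored list of action values per discrete state."""

    def __init__(self, n_actions):
        self.n_actions = n_actions
        self.table = {}

    def values(self, state):
        # An unseen state starts at zero; nothing carries over from similar states.
        return self.table.setdefault(state, [0.0] * self.n_actions)

class TinyQNetwork:
    """Function approximator: maps a real-valued feature vector to one value per action."""

    def __init__(self, n_inputs, n_hidden, n_actions, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_actions)]
        self.b2 = [0.0] * n_actions

    def values(self, features):
        # Hidden layer with tanh activation, followed by a linear output layer.
        hidden = [
            math.tanh(sum(w * x for w, x in zip(row, features)) + b)
            for row, b in zip(self.w1, self.b1)
        ]
        return [
            sum(w * h for w, h in zip(row, hidden)) + b
            for row, b in zip(self.w2, self.b2)
        ]
```

The table needs a separate entry for every state it encounters, whereas the network shares its weights across all inputs, which is what makes high-dimensional observations such as images tractable.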

Recommendations for Improvement

Technical Accuracy

The definition should be revised to include proper technical terminology and accurate descriptions of core concepts. Key terms like "agent," "environment," "policy," and "value function" should be explicitly defined.

Comprehensive Coverage

Expand the definition to include major algorithmic approaches (DQN, policy gradients, actor-critic methods), mathematical foundations (MDPs, Bellman equation), and practical considerations (exploration vs. exploitation trade-offs).

Balanced Perspective

Include discussion of current limitations and challenges alongside successes. This provides readers with realistic expectations about the technology's capabilities and constraints.

Current Applications

Expand beyond gaming examples to include diverse real-world applications in robotics, healthcare, finance, and autonomous systems, which demonstrate DRL's broader impact.

In Summary

While the AI blog's definition serves as an adequate starting point for complete beginners, it falls short of the comprehensive, technically accurate treatment expected from a terminology resource. The definition would benefit from substantial revision to include proper technical foundations, acknowledge current limitations, and provide the depth of coverage that matches the complexity and importance of deep reinforcement learning in modern AI research and applications.

For readers seeking authoritative understanding of deep reinforcement learning, consulting academic sources and comprehensive technical references would provide more complete and accurate information than this blog definition currently offers.

-

This review evaluates the AI Blog’s definition of “Deep Reinforcement Learning” for accuracy and clarity. Overall, the explanation is conceptually sound and presented in an accessible manner. It effectively communicates what deep reinforcement learning (DRL) is, using simple language and examples. Below, we outline key strengths and a few weaknesses in how the term is defined for a general audience.

Strengths

Accurate Core Definition

The blog correctly defines deep reinforcement learning as a branch of machine learning where an agent learns by interacting with an environment to maximize a reward. This captures the essence of reinforcement learning (learning through trial and error to maximize cumulative rewards) and aligns with standard expert definitions. The explanation emphasizes that the agent improves its behavior over time by learning from mistakes, which is conceptually accurate.

Clear, Relatable Language

The definition is written in plain language that non-experts can follow. For instance, it likens deep reinforcement learning to how humans learn new skills: “when we are first learning to drive a car, we make mistakes…with time and experience, we learn… In the same way, deep reinforcement learning allows agents to gradually improve their behavior as they gain experience.” This driving analogy makes the trial-and-error learning process intuitive. Terms like “mistakes,” “experience,” and “reward” are used in their everyday sense, which helps demystify the concept for readers.

Highlights Key Distinction

The explanation clearly differentiates DRL from other forms of machine learning. It notes that unlike traditional supervised learning (which uses labeled data), DRL relies on trial and error and feedback from the environment. This is a crucial point, as it underscores that in reinforcement learning the system learns by exploring actions and receiving rewards or penalties, rather than being explicitly taught with examples. By making this distinction, the article helps readers understand why deep reinforcement learning is unique within AI.

Use of Examples and Achievements

The article strengthens understanding by citing well-known successes of deep RL. It mentions that DRL has been used to teach agents to play complex games like chess and Go, achieving superhuman performance (e.g. referencing AlphaGo). These concrete examples give readers a real-world context. Many readers might recall news about AI mastering Go or video games, so this connection makes the definition more tangible and memorable. It also notes that DRL can tackle tasks “difficult for other machine learning algorithms”, reinforcing why the technique is important.

Thorough yet Approachable

The definition doesn’t stop at a one-liner – it is part of a broader explanation that covers core concepts and even applications. After the initial definition, the blog post gently introduces foundational terms (agent, state, action, reward) and even explains how deep learning is integrated into reinforcement learning. For readers with more interest, these details illustrate that “deep” RL combines neural networks with reinforcement learning principles, enabling the handling of complex inputs. Despite touching on such technical aspects, the text maintains an approachable tone. Complex ideas (like the exploration-exploitation trade-off or use of neural networks) are briefly explained in simple terms, so an interested reader gains insight without feeling lost. This layered approach – a basic definition, a human analogy, then deeper concepts – is a strong point, as it caters to both casual readers and those seeking more depth.

Weaknesses

“Deep” Aspect Not Immediate

One minor critique is that the initial definition doesn’t explicitly explain what makes deep reinforcement learning “deep.” It introduces DRL as a branch of ML about agents learning via trial and error – which is correct, but essentially describes reinforcement learning in general. The role of deep learning (i.e. using deep neural networks) is mentioned later in the article, rather than in the first overview sentence. A curious reader might wonder at first “why is it called deep reinforcement learning?” The article does answer this, explaining that deep neural networks allow the technique to handle complex inputs and tasks, but only after the initial paragraphs. While this staged explanation helps keep the opener simple, explicitly hinting at the deep-neural-network aspect up front could have made the definition immediately more precise.

Assumes Some Terminology

The explanation uses a few technical terms without initial definition, which could momentarily confuse readers unfamiliar with AI jargon. For example, terms like “agents” and “environment” appear in the first sentence. Although their meaning can be inferred (and the reinforcement learning basics section later defines them), absolute beginners might not know these terms in an AI context. Similarly, the later portions reference concepts like “Q-learning” and “policy gradient methods” or “function approximators”. These specific terms likely won’t be fully understood by a general audience (they are more for readers with a deeper interest). The good news is that understanding those details isn’t necessary to grasp the main idea – the core message (learning through feedback to maximize reward) still comes across. But it’s worth noting that a few parts of the explanation dip into technical language that maybe 20% of readers (the more tech-savvy segment) will appreciate more than the rest.

Broad Analogy (Potential Over-Simplification)

The human-learning analogy (learning to drive by making mistakes) is very helpful, but it does simplify the concept. Real human learning is of course more complex than an AI agent optimizing a reward signal. The article’s phrasing “in many ways, deep reinforcement learning is similar to the process of learning that humans undergo” is a fair simplification for clarity. However, a reader might over-generalize this to think AI learns just like humans. The intended point is that both improve through trial and error, which is true. This “strength-as-weakness” is minor, since the analogy is clearly meant to illustrate the trial-and-error mechanism. Most readers will understand it’s a simplification, but it’s something to keep in mind: analogies, while accessible, trade off some precision.

In summary, the AI Blog’s definition of deep reinforcement learning is conceptually accurate and communicates the intended meaning well. It effectively captures what DRL involves (agents learning by trial-and-error to maximize rewards) and why it’s noteworthy (ability to solve complex tasks, inspired by successes in games and other fields). The explanation is largely accessible to non-specialists: it uses everyday language and examples, with a logical flow from basic idea to more detailed aspects. A few technical terms appear along the way, but they do not obstruct the general understanding for the majority of readers. Overall, the definition’s strength lies in its clarity and real-world illustrations, making a complex AI concept understandable to about 80% of the general audience with an interest in AI, which is exactly the goal. The minor gaps (like not immediately defining “deep” or sporadic jargon) are relatively small issues in an otherwise strong and reader-friendly explanation.

-

The terminology page on Deep Reinforcement Learning (DRL) from the AI blog provides a solid, accessible introduction to the concept, blending foundational explanations with practical insights. Aimed at a general audience interested in AI, the definition effectively positions DRL as a fusion of reinforcement learning's trial-and-error mechanics and deep learning's neural network capabilities, emphasizing how agents learn to maximize rewards in dynamic environments.

Strengths

Clarity and Readability

The explanation is straightforward and jargon-light, making it beginner-friendly. For instance, it draws a relatable analogy to human learning from mistakes, which helps demystify the abstract process of agents interacting with states, actions, and rewards. Key concepts like exploration vs. exploitation are introduced concisely without overwhelming the reader.

Structure and Completeness

The page is well-organized, covering essentials such as key components (e.g., agents, environments, and deep neural networks), operational mechanics (trial-and-error with feedback), and a balanced discussion of applications. It includes diverse real-world examples, from game-playing AI like AlphaGo and AlphaZero to robotics, healthcare, and finance, illustrating DRL's versatility and impact.

Accuracy

Based on established AI knowledge, the content is factually sound. It correctly highlights DRL's ability to handle high-dimensional data (e.g., images) through deep networks and differentiates it from supervised learning by stressing the role of continuous environmental feedback.

Weaknesses

Depth Limitations

While suitable for an introductory definition, it skimps on technical details, such as specific algorithms (e.g., Deep Q-Networks or Actor-Critic methods) or mathematical underpinnings like value functions and policy gradients. This might leave intermediate learners wanting more rigor.

Omission of Challenges

The page glosses over common DRL pitfalls, including training instability, high computational demands, or the need for vast amounts of data (sample inefficiency). Including a brief note on these could provide a more balanced view, especially for readers considering practical implementations.

Visual Aids

There's no mention of diagrams, flowcharts, or visuals in the summary, which could enhance understanding of agent-environment interactions, a missed opportunity for a blog format.

Overall, this definition earns a strong 8/10 rating. It's an excellent starting point for newcomers to AI terminology, offering value through its brevity and applicability focus. For those seeking deeper dives, it could serve as a gateway to more advanced resources, but it stands out as a reliable, engaging entry in the blog's terminology series. If you're exploring AI basics, this page is worth a read.