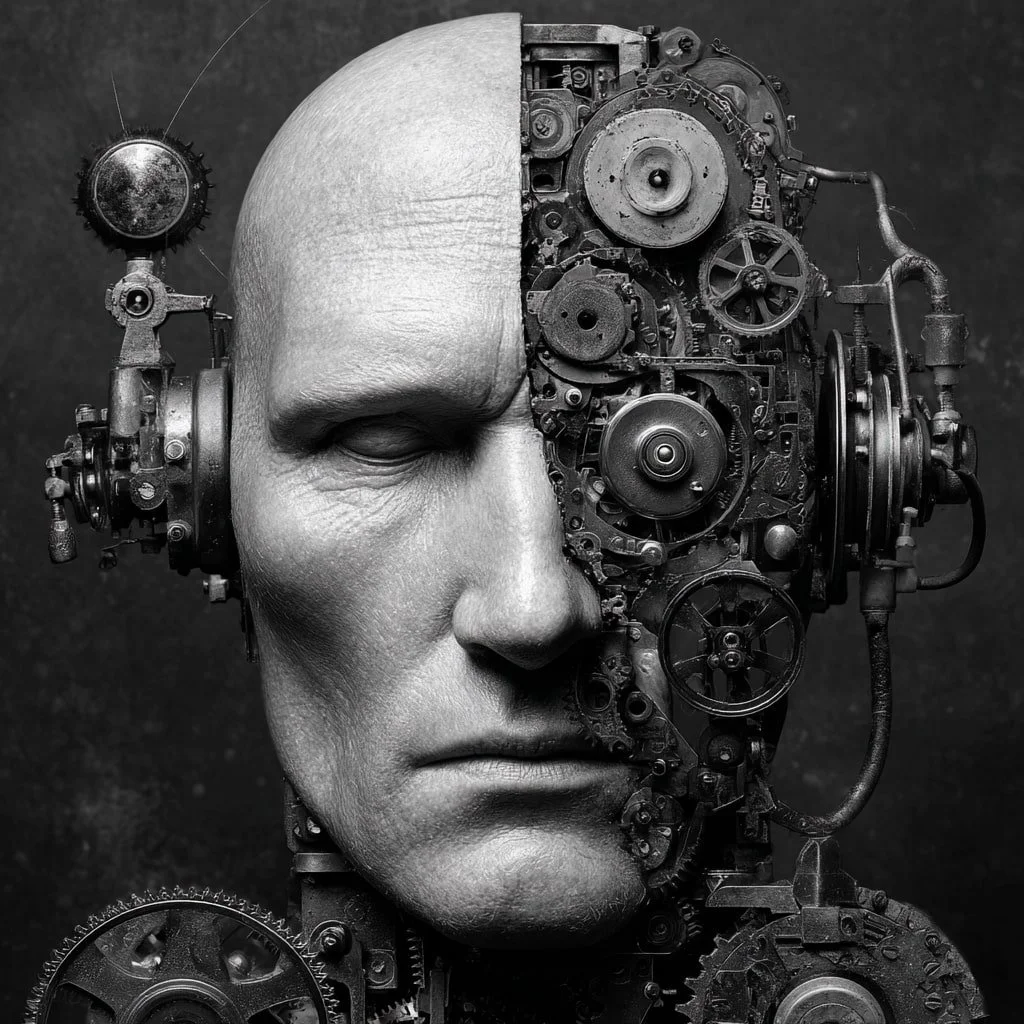

Deep Learning (DL)

Image by Midjourney

Deep learning is a branch of machine learning (itself a subset of artificial intelligence) that uses artificial neural networks with many layers to learn complex patterns in data. Loosely inspired by the structure and function of the human brain, these networks are composed of interconnected nodes (neurons) organized into an input layer, multiple hidden layers, and an output layer. Each neuron multiplies its inputs by learned weights, adds a bias, and passes the result through an activation function, allowing the network to transform raw data into increasingly abstract and useful representations. The term “deep” refers to the number of layers; more layers generally allow the network to capture more complex relationships.
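
To make the weight, bias, and activation idea concrete, here is a minimal sketch of a single artificial neuron in plain Python. The input values, weights, bias, and the choice of a sigmoid activation are assumptions picked only for illustration; real networks learn these numbers during training and often use other activations.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

# Hypothetical values chosen only for illustration.
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]
bias = 0.1

activation = neuron(inputs, weights, bias)
print(round(activation, 4))  # prints 0.4013
```

A layer is simply many such neurons reading the same inputs, and a deep network feeds each layer's outputs into the next.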

Deep learning models excel at tasks such as image recognition, speech transcription, natural language processing, recommendation, and autonomous navigation. They are trained on large datasets with optimization algorithms such as stochastic gradient descent: backpropagation computes how much each parameter contributed to the error, and the optimizer adjusts the parameters to reduce it. Learning can be supervised, semi-supervised, or unsupervised, depending on the availability of labeled data.
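
The parameter-update idea can be sketched on the smallest possible model, a single line `y = w * x + b`. The target function, learning rate, and epoch count below are assumptions for illustration, and this toy has no hidden layers, so the gradients are written by hand rather than obtained through backpropagation.

```python
import random

random.seed(0)

# Synthetic data from a hypothetical target function y = 2x + 1.
data = [(x / 10.0, 2.0 * (x / 10.0) + 1.0) for x in range(-10, 11)]

w, b = 0.0, 0.0          # parameters to learn, starting from zero
learning_rate = 0.1      # assumed step size

for epoch in range(200):
    random.shuffle(data)              # "stochastic": visit samples in random order
    for x, y in data:
        error = (w * x + b) - y       # prediction minus target
        # Gradients of the squared error 0.5 * error**2 with respect to w and b.
        w -= learning_rate * error * x
        b -= learning_rate * error

print(round(w, 3), round(b, 3))
```

After training, `w` and `b` should sit very close to 2 and 1. In a deep network the same update rule applies to every weight, with backpropagation supplying each gradient through the chain rule.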

How Deep Learning Works

Feature Extraction … early layers detect low-level features (edges, colors, textures).

Abstraction … deeper layers combine these features into higher-level concepts (faces, words, objects).

Prediction … the final layer produces an output, such as a classification label or numerical prediction.
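
The three stages above can be sketched as one forward pass through a small stack of layers. This is only an illustration under stated assumptions: the layer sizes, the ReLU and softmax functions, the random untrained weights, and the helper names (`dense`, `random_layer`) are all hypothetical choices, so the printed probabilities carry no meaning beyond showing the data flow.

```python
import math
import random

def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Keep positive values and replace negative ones with zero."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    shift = max(values)                      # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def random_layer(n_in, n_out, rng):
    """Untrained, randomly initialised weights; purely illustrative."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

rng = random.Random(42)
layer1 = random_layer(4, 8, rng)   # early layer: low-level features
layer2 = random_layer(8, 6, rng)   # deeper layer: combinations of those features
output = random_layer(6, 3, rng)   # final layer: one score per class

x = [0.2, -0.5, 0.9, 0.1]          # hypothetical 4-value input
h1 = relu(dense(x, *layer1))       # feature extraction
h2 = relu(dense(h1, *layer2))      # abstraction
probs = softmax(dense(h2, *output))  # prediction
predicted_class = probs.index(max(probs))
print(probs, predicted_class)
```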

A Brief History

The foundations of deep learning trace back to neural network research in the 1940s, though progress was limited by the computing power and data available at the time. In 2006, Geoffrey Hinton and collaborators, including Simon Osindero, Yee-Whye Teh, and Ruslan Salakhutdinov, popularized deep belief networks, reviving interest in training deep architectures. The major breakthrough came in 2012, when Alex Krizhevsky, Ilya Sutskever, and their advisor Hinton developed AlexNet, a deep convolutional neural network that won the ImageNet competition by a wide margin and ignited the modern deep learning boom. In 2016, Ian Goodfellow, Yoshua Bengio, and Aaron Courville published the influential Deep Learning textbook, which consolidated the field’s foundations.

Neural networks use layers of low- and high-level feature detectors to filter input data, and the number of layers depends on the task. For example, a facial recognition model may need more layers than a simple audio classifier. Depth is relative: a system with 10 layers is considered shallow compared to one with 100. Capsule networks, introduced in their current form in 2017, aimed to better model spatial relationships between objects in images, with the goal of improving accuracy.

Today, deep learning powers applications from autonomous vehicles and medical diagnosis to fraud detection, product recommendations, speech recognition, translation, and robotics, and its influence continues to grow across industries.

A tiny neural network learns to separate a two-moons dataset in real time: you see hundreds of colored points forming two interlocking crescents while a soft, semi-transparent field shows the model’s current estimate of “probability of class 1,” shifting from blue to pink as training progresses. Subtle cross-hair axes give orientation, and each epoch updates the field, gradually bending the decision boundary to hug the moons’ shapes. A compact HUD displays epoch, loss, and learning rate; controls let you pause, single-step, reset, or reshuffle to a new random seed. The run ends automatically after a set number of epochs or once the loss drops below a target, so you watch the decision surface converge rather than loop forever.
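
For readers who want to reproduce the idea without the visualization, the following is a rough, text-only sketch of such a training run in plain Python. It is not the code behind the demo described above: the network size (8 tanh hidden units), noise level, learning rate, epoch limit, and loss target are all assumptions, and the data generator only imitates the two-moons shape.

```python
import math
import random

rng = random.Random(0)   # change the seed to "reshuffle" the run

def make_moons(n_per_class, noise):
    """Two interlocking crescents with Gaussian noise, labeled 0 and 1."""
    points = []
    for i in range(n_per_class):
        t = math.pi * i / (n_per_class - 1)
        points.append(((math.cos(t) + rng.gauss(0, noise),
                        math.sin(t) + rng.gauss(0, noise)), 0))
        points.append(((1 - math.cos(t) + rng.gauss(0, noise),
                        0.5 - math.sin(t) + rng.gauss(0, noise)), 1))
    return points

HIDDEN = 8
w1 = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(HIDDEN)]
b1 = [0.0] * HIDDEN
w2 = [rng.uniform(-1, 1) for _ in range(HIDDEN)]
b2 = 0.0

def forward(x):
    """Return hidden activations and the estimated probability of class 1."""
    hidden = [math.tanh(w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(w1, b1)]
    z = sum(w * h for w, h in zip(w2, hidden)) + b2
    z = max(-60.0, min(60.0, z))          # guard against overflow in exp
    return hidden, 1.0 / (1.0 + math.exp(-z))

data = make_moons(100, 0.1)
learning_rate, max_epochs, target_loss = 0.1, 200, 0.05

for epoch in range(1, max_epochs + 1):
    rng.shuffle(data)
    total_loss = 0.0
    for x, y in data:
        hidden, p = forward(x)
        p = min(max(p, 1e-9), 1 - 1e-9)   # keep log() finite
        total_loss += -(y * math.log(p) + (1 - y) * math.log(1 - p))
        dz = p - y                        # cross-entropy gradient at the output
        for j in range(HIDDEN):
            dh = dz * w2[j] * (1 - hidden[j] ** 2)   # backpropagate through tanh
            w2[j] -= learning_rate * dz * hidden[j]
            w1[j][0] -= learning_rate * dh * x[0]
            w1[j][1] -= learning_rate * dh * x[1]
            b1[j] -= learning_rate * dh
        b2 -= learning_rate * dz
    loss = total_loss / len(data)
    if loss < target_loss:                # stop early once the target is reached
        break

accuracy = sum((forward(x)[1] > 0.5) == (y == 1) for x, y in data) / len(data)
print(f"stopped at epoch {epoch}, loss {loss:.3f}, accuracy {accuracy:.2f}")
```

Evaluating `forward` on a grid of points and coloring each cell by the returned probability would give the kind of shifting decision field the description refers to.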

Strengths and Limitations

Advantages

State-of-the-art accuracy in vision, speech, and language tasks

Automatically learns features from raw data without manual engineering

Scales well with large datasets and high-performance hardware

Challenges

Requires massive labeled datasets for best results

High computational and energy costs

Often operates as a “black box” with limited interpretability

Applications and Future Outlook

Deep learning now powers virtual assistants, autonomous vehicles, fraud detection systems, medical imaging diagnostics, translation tools, and generative AI. Future research is focused on making models more efficient, more interpretable, and less dependent on massive labeled datasets, opening the door for more accessible and trustworthy AI systems.

Google Trends data shows that search interest in “deep learning” was minimal from 2010 until around 2014, roughly two years after the AlexNet breakthrough, and then rose steadily to a peak in the late 2010s. From 2019 to early 2023, interest remained relatively stable with moderate fluctuations, then began climbing again in late 2023. By April 2025, interest had surged sharply, more than doubling in a short time and approaching new all-time highs.

TensorFlow can be used to build custom neural networks for tasks such as image recognition or natural language processing.